Student Partnership Assessment

Method

This systematic review followed the standard steps of the systematic review process: formulating the research questions, constructing inclusion and exclusion criteria, developing a search strategy, screening studies against the selection criteria, extracting data, assessing quality and relevance, and synthesizing the results to answer the research questions (Gough 2007). The previous section explains the conceptual framework adopted in this study and how the research questions were derived from it; as Sih, Pollack, and Zepeda (2019) emphasize, systematic review questions should be built on a sound conceptual framework. The review procedure is described in the following subsections.

Inclusion and exclusion criteria

Six criteria were devised for the selection of articles (see Table S1). This review aims to explore how student partnership is enacted in assessment communities of practice; therefore, the primary criterion for inclusion was that a publication report an empirical study. Restricting the review to empirical studies means that the synthesis draws on collaborative efforts that have actually been implemented and trialed, so that practice-based conclusions can be drawn. The included empirical studies comprise quantitative, qualitative, and mixed methods research; all theoretical and conceptual papers were excluded from the review.

Table S1. Inclusion and exclusion criteria

| Inclusion criteria | Exclusion criteria | Rationale |
|---|---|---|
| English language | Languages other than English | Lack of resources for translation |
| Published between 2000 and March 2022 | Publications before 2000 | Consistency with the development of the student partnership in assessment literature |
| Empirical studies | Theoretical/conceptual papers | To provide practice-based conclusions |
| Assessment activities | Non-assessment-related activities | Relevance to the topic of the review |
| Higher education context | Contexts other than higher education, e.g., primary education, secondary education, professional training | Relevance to the topic of the review |
| Evidence of student-staff collaboration | Participatory practices without student-staff collaboration | Consistency with the definition of partnership adopted in the review |

Because this review focuses on assessment-related activities that involve student partnership and on the roles students adopt in partnership, the selected studies had to provide sufficiently clear information for such data to be extracted. In addition, to qualify as empirical work on student partnership, a study had to show clear evidence of collaboration between students and academic staff, particularly in its description of the research. Studies that examined participatory practices in which students and staff performed a task without engaging in dialogue and negotiation were not included. This criterion follows the definition of partnership as a collaborative and reciprocal process set out in the previous section.

Literature search

The literature search consisted of three parts. First, searches were performed in four major electronic academic databases (ERIC, Web of Science, Scopus, and PsycInfo), which together host a large number of academic journals covering broad disciplinary areas. Three groups of keywords representing the main concepts of the review topic (“student partnership”, “assessment”, and “higher education”), together with their synonyms and associated terms, were used to search titles and abstracts for relevant articles (see Table S2). Although the notion of student-teacher collaboration in assessment dates back to the 1980s (Boud and Prosser 1980; Falchikov 1986), it began to gain widespread attention in the 1990s (e.g., Birenbaum 1996; Towler and Broadfoot 1992), with the term “partnership” coming into use in the late 1990s (Stefani 1998). The search was therefore restricted to articles published between 2000 and 2022. Because no language resources were available to support translation, the searches were limited to articles published in English.

Table S2. Search terms

| Concept | Search terms |
|---|---|
| Student partnership | “student partnership” OR “student-staff partnership” OR “student-faculty partnership” OR “students as partners” OR “student-staff collaboration” OR “student voice” OR “student engagement” |
| Assessment | “assessment” OR “evaluation” OR “assessment for learning” OR “assessment as learning” OR “co-assessment” OR “co-creation” OR “self-assessment” OR “peer assessment” |
| Higher education | “higher education” OR “university” OR “college” OR “postsecondary” OR “tertiary” |
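
The search logic behind these keyword groups (terms joined with OR within a concept, concepts joined with AND) can be sketched in Python. This is a minimal illustration, not the tool the searches were run with: the helper names `or_group` and `build_query` and the `field_tag` argument are hypothetical, and each database's real field syntax (see the strings in Table S3) differs in spacing and tag format.

```python
# Minimal sketch: combine concept groups into one Boolean query string.
# Within a group, terms are ORed; across groups, the OR-groups are ANDed.
# `field_tag` is a hypothetical stand-in for database-specific field codes.

CONCEPT_GROUPS = {
    "student partnership": [
        "student partnership", "student-staff partnership",
        "student-faculty partnership", "students as partners",
        "student-staff collaboration", "student voice", "student engagement",
    ],
    "assessment": [
        "assessment", "evaluation", "assessment for learning",
        "assessment as learning", "co-assessment", "co-creation",
        "self-assessment", "peer assessment",
    ],
    "higher education": [
        "higher education", "university", "college", "postsecondary", "tertiary",
    ],
}


def or_group(terms: list[str], field_tag: str = "") -> str:
    """Quote each term as a phrase, join with OR, and wrap in parentheses."""
    joined = " OR ".join(f'"{term}"' for term in terms)
    return f"{field_tag}({joined})" if field_tag else f"({joined})"


def build_query(groups: dict[str, list[str]], field_tag: str = "") -> str:
    """AND together one OR-group per concept, in insertion order."""
    return " AND ".join(or_group(terms, field_tag) for terms in groups.values())


if __name__ == "__main__":
    print(build_query(CONCEPT_GROUPS, field_tag="AB"))
```

Passing three single-term groups reproduces the shape of the simpler Google Scholar string, where each concept is represented by one phrase.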

Second, manual searches using the same parameters were performed in the web search engine Google Scholar and in two online journals, the International Journal for Students as Partners and Teaching and Learning Together in Higher Education, which were chosen for their prominent focus on collaborative work between students and staff. Because Google Scholar handles complex searches less well than bibliographic databases, it was used as a secondary method to complement the database searches. The search string (“student partnership” AND “assessment” AND “higher education”) was used to retrieve articles published between 2000 and 2022, excluding patents. About 1,520 results were returned; because most of them were similar to the database search results, only the first 100 were screened. Third, backward reference searching was carried out by examining the reference lists of relevant articles and background reading materials to identify further relevant studies. In all three search processes, both peer-reviewed and non-peer-reviewed publications were included, since non-peer-reviewed publications such as book chapters, reports, and conference proceedings might also be relevant to the purpose of this study.

Study selection

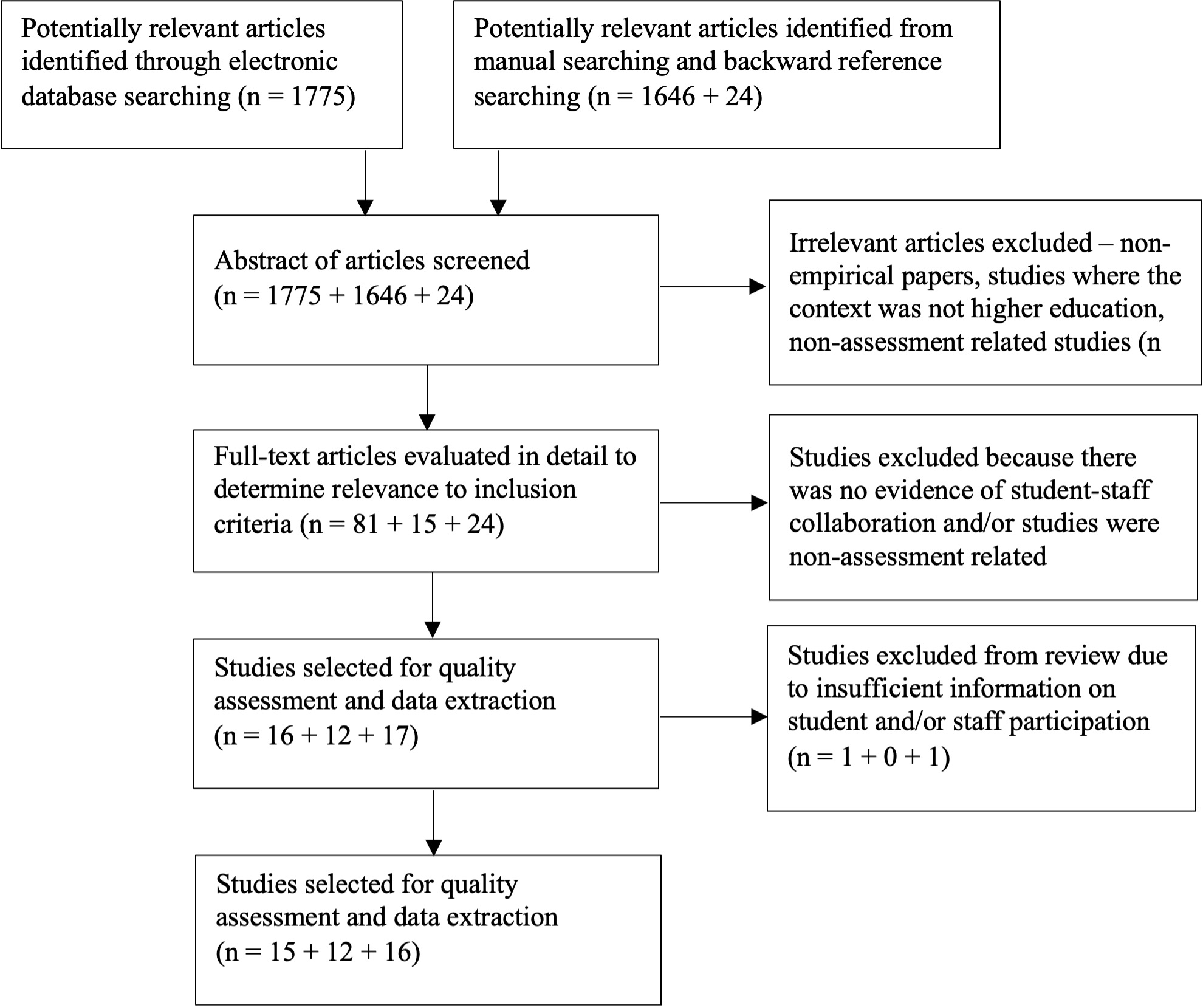

The database searches yielded 1,775 results (see Table S3), and manual and backward reference searching identified a further 1,670 potential articles. The abstracts of these articles were screened against the third, fourth, and fifth criteria in Table S1 (the first two criteria had already been applied through search filters). If an abstract did not make clear whether the study was assessment-related or conducted in a tertiary setting, the article was retained so that these criteria could be evaluated during full-text review. After abstract screening, 120 articles were selected for full-text review. Each of these was then read in full and checked against the last criterion in Table S1 (evidence of student-staff collaboration in the reported study) and, where necessary, against the earlier criteria; this step eliminated a further 75 articles. In total, 45 empirical studies (16 from database searches, 12 from manual searches, and 17 from backward reference searching) proceeded to quality assessment and data extraction. The selection process is shown as a flow diagram in Figure S1.
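
The abstract-screening rule described above, under which an article is excluded only when it clearly fails a criterion and is otherwise passed to full-text review, can be expressed as a small decision function. This is a hypothetical sketch: the `Judgment` values and criterion keys are illustrative names, and treating an unclear judgment on the empirical criterion the same way as the two context criteria is an assumption, since the text states the rule only for assessment relevance and setting.

```python
from enum import Enum


class Judgment(Enum):
    """A screener's reading of one criterion from an abstract."""
    YES = "yes"
    NO = "no"
    UNCLEAR = "unclear"


# Criteria 3-5 of Table S1, checked at the abstract stage; criteria 1-2
# (language and date) were handled by search filters.
ABSTRACT_CRITERIA = ("empirical", "assessment", "higher_education")


def abstract_decision(judgments: dict[str, Judgment]) -> str:
    """Return 'exclude' if any criterion clearly fails, else 'full_text'.

    UNCLEAR never excludes: the article is kept so the criterion can be
    checked in full-text review. Applying this to the 'empirical'
    criterion as well is an assumption of this sketch.
    """
    for criterion in ABSTRACT_CRITERIA:
        if judgments[criterion] is Judgment.NO:
            return "exclude"
    return "full_text"


if __name__ == "__main__":
    # An abstract that does not state whether the setting is tertiary.
    print(abstract_decision({
        "empirical": Judgment.YES,
        "assessment": Judgment.YES,
        "higher_education": Judgment.UNCLEAR,
    }))
```

Making "unclear" non-excluding biases this stage toward recall, leaving precision to the full-text review.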

Table S3. Database search strings and results

| Database | Search string | Field searched | Search results |
|---|---|---|---|
| ERIC | AB ( “student partnership” OR “student-staff partnership” OR “student-faculty partnership” OR “students as partners” OR “student-staff collaboration” OR “student voice” OR “student engagement” ) AND AB ( “assessment” OR “evaluation” OR “assessment for learning” OR “assessment as learning” OR “co-assessment” OR “co-creation” OR “self-assessment” OR “peer assessment” ) AND AB ( “higher education” OR “university” OR “college” OR “postsecondary” OR “tertiary” ) | Abstract | 193 |
| PsycInfo | ab(“student partnership” OR “student-staff partnership” OR “student-faculty partnership” OR “students as partners” OR “student-staff collaboration” OR “student voice” OR “student engagement”) AND ab(“assessment” OR “evaluation” OR “assessment for learning” OR “assessment as learning” OR “co-assessment” OR “co-creation” OR “self-assessment” OR “peer assessment”) AND ab(“higher education” OR “university” OR “college” OR “postsecondary” OR “tertiary”) | Abstract | 83 |
| Scopus | ABS ( “student partnership” OR “student-staff partnership” OR “student-faculty partnership” OR “students as partners” OR “student-staff collaboration” OR “student voice” OR “student engagement” ) AND ABS ( “assessment” OR “evaluation” OR “assessment for learning” OR “assessment as learning” OR “co-assessment” OR “co-creation” OR “self-assessment” OR “peer assessment” ) AND ABS ( “higher education” OR “university” OR “college” OR “postsecondary” OR “tertiary” ) | Abstract | 967 |
| Web of Science | ((AB=(“student partnership” OR “student-staff partnership” OR “student-faculty partnership” OR “students as partners” OR “student-staff collaboration” OR “student voice” OR “student engagement”)) AND AB=(“assessment” OR “evaluation” OR “assessment for learning” OR “assessment as learning” OR “co-assessment” OR “co-creation” OR “self-assessment” OR “peer assessment”)) AND AB=(“higher education” OR “university” OR “college” OR “postsecondary” OR “tertiary”) | Abstract | 532 |
| Total | | | 1,775 |
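
As a consistency check, the selection funnel can be recomputed from the counts reported in this subsection, in Table S3, and for the two studies later excluded at data extraction. The sketch below only re-adds figures stated in the text; the function name is hypothetical, and it does not attempt to model de-duplication or the Google Scholar screening cutoff.

```python
def selection_funnel() -> dict[str, int]:
    """Recompute the study-selection counts from the reported figures."""
    # Hits per database, as listed in Table S3.
    database_hits = {"ERIC": 193, "PsycInfo": 83, "Scopus": 967, "Web of Science": 532}
    other_sources = 1_670          # manual and backward reference searching
    full_text_reviewed = 120       # retained after abstract screening
    excluded_at_full_text = 75
    # Studies passed to quality assessment, broken down by search route.
    by_route = {"database": 16, "manual": 12, "backward reference": 17}
    excluded_at_extraction = 2     # insufficient information on partnership

    after_full_text = full_text_reviewed - excluded_at_full_text
    # The by-route breakdown should account for every study passed forward.
    assert sum(by_route.values()) == after_full_text

    database_total = sum(database_hits.values())
    return {
        "database_total": database_total,
        "all_sources": database_total + other_sources,
        "after_full_text": after_full_text,
        "included": after_full_text - excluded_at_extraction,
    }


if __name__ == "__main__":
    for stage, count in selection_funnel().items():
        print(f"{stage}: {count:,}")
```

The internal assertion confirms that the 16 + 12 + 17 breakdown matches the 120 − 75 articles that survived full-text review.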

Quality assessment and data extraction

In a systematic research synthesis, it is important to assess the quality and relevance of the data obtained from the selected studies. Gough (2007) contends that, because questions and foci differ from one systematic review to another, the standards for judging the quality of included studies should comprise both generic and review-specific criteria. The quality assessment tool used in this review was devised with this goal in mind: to evaluate the general robustness of the research procedure and the trustworthiness of the findings in each selected study, while also assessing whether the study was suitable for answering the review questions. The tool consisted of two parts with four questions each (see Table S4). Part 1 contained generic criteria adapted from Hong et al.’s (2018) Mixed Methods Appraisal Tool; Part 2 was designed based on the aim and research questions of this review. To be deemed fit for the purpose of this review, a study had to fulfil:

- criteria 1.1, 2.1, and 2.2; and

- at least two of the following criteria from Part 1: 1.2, 1.3, and 1.4; and

- at least one of the following criteria from Part 2: 2.3 and 2.4.

Table S4. Quality assessment tool

| Dimension | Quality criteria |
|---|---|
| 1. Generic assessment | 1.1. Are the research objectives/questions clearly stated? 1.2. Is the method of data collection appropriate? 1.3. Is the method of data analysis explained? 1.4. Are the conclusions drawn from the results/findings of the study? |
| 2. Review-specific assessment | 2.1. Is student assessment a focus of the study? 2.2. Is the collaborative relationship between students and staff made clear? 2.3. Are students’ activities in the partnership clearly described? 2.4. Are staff’s activities in the partnership clearly described? |
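
The decision rule stated before Table S4 (all of 1.1, 2.1, and 2.2; at least two of 1.2, 1.3, and 1.4; at least one of 2.3 and 2.4) can be encoded as a set-based check. This is a minimal sketch; the function name and the use of criterion labels as strings are illustrative choices, not part of the published tool.

```python
def fit_for_review(met: set[str]) -> bool:
    """Apply the review's quality-assessment rule.

    `met` holds the Table S4 criterion labels a study satisfies,
    e.g. {"1.1", "1.2", "1.3", "2.1", "2.2", "2.3"}.
    """
    mandatory = {"1.1", "2.1", "2.2"}          # must all be met
    generic_optional = {"1.2", "1.3", "1.4"}   # at least two from Part 1
    review_optional = {"2.3", "2.4"}           # at least one from Part 2
    return (
        mandatory <= met
        and len(generic_optional & met) >= 2
        and len(review_optional & met) >= 1
    )


if __name__ == "__main__":
    print(fit_for_review({"1.1", "1.2", "1.3", "2.1", "2.2", "2.3"}))
```

Expressing each part as a set keeps the three conditions independent, so a change to one threshold does not affect the others.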

Data extraction was performed concurrently with quality assessment using an Excel worksheet. The extracted data included descriptions of individual studies, such as context, year of study, methods, and participants, as well as information related to the review questions, for example, assessment activities, students’ actions/responsibilities, and staff’s actions/responsibilities. The two researchers discussed and agreed on the extraction template and then extracted data independently using it. Closer examination at this stage showed that some studies that had appeared relevant at screening were unsuitable for the purpose of the review: two studies were excluded because they provided insufficient information on student and staff participation to support an analysis of assessment partnership. Hence, after quality assessment and data extraction, 43 studies were found to be suitable for the purpose of this review, and the data extracted from these studies were synthesized to answer the review questions. The characteristics of the 43 included studies are presented in Table S5.
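
The extraction template described above can be sketched as a record type whose fields mirror the items listed in the text, with a helper that lists the fields on which two independently completed extractions differ. The field names, the `disagreements` helper, and the sample values in the usage example are hypothetical. The text does not say how disagreements in extraction were reconciled, so flagging them for discussion is an assumption that mirrors the procedure used in the result synthesis.

```python
from dataclasses import dataclass, fields, replace


@dataclass(frozen=True)
class ExtractionRecord:
    """One row of a hypothetical extraction worksheet; fields follow the text."""
    study_id: str
    context: str
    year: int                      # year of study
    methods: str
    participants: str
    assessment_activities: str
    student_actions: str           # students' actions/responsibilities
    staff_actions: str             # staff's actions/responsibilities


def disagreements(a: ExtractionRecord, b: ExtractionRecord) -> list[str]:
    """Return the names of fields on which two extractions of one study differ."""
    if a.study_id != b.study_id:
        raise ValueError("records describe different studies")
    return [f.name for f in fields(ExtractionRecord)
            if getattr(a, f.name) != getattr(b, f.name)]


if __name__ == "__main__":
    # Hypothetical values, for illustration only.
    researcher_a = ExtractionRecord(
        study_id="S01", context="undergraduate course", year=2019,
        methods="qualitative", participants="students and lecturers",
        assessment_activities="rubric co-design",
        student_actions="draft criteria", staff_actions="facilitate workshops",
    )
    researcher_b = replace(researcher_a, methods="mixed methods")
    print(disagreements(researcher_a, researcher_b))
```

Fields are compared in declaration order, so the returned list follows the column order of the template.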

Result synthesis

The data were analyzed using Braun and Clarke’s (2006) theoretical thematic analysis approach. The extracted data were organized in an Excel spreadsheet according to the three research questions. Each set of data was examined separately, and an initial code was assigned to describe each data segment. After all the data segments had been coded, they were reread and examined for patterns, and codes that were associated with one another or related to the same issue were grouped together. For example, “create and refine assessment tools/tasks”, “create supporting resources for staff and students”, and “prepare answers for designed tasks” were codes for the second research question on students’ roles. All three codes concerned developing something, so they were placed in the same group, and a label was then assigned to describe the group; in this example, the label was “co-designers”.

After all three sets of data had been coded and grouped into categories, the categories were reviewed to check whether they adequately accounted for all the codes and whether all the data segments fit the codes and categories. Both researchers performed the analysis independently and then met to compare their codes and categories; discrepancies were resolved through discussion and negotiation. In total, 51 codes were grouped into 12 categories, which were further organized into three themes whose names were guided by the research questions. The findings are reported according to these three themes: areas of partnership, roles of students, and support provided by university staff.
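
The grouping step (codes into categories, categories into themes) can be pictured as a nested mapping. The sketch below uses only the three codes, the “co-designers” category, and the “roles of students” theme named in the text; the remaining codes and categories are not reproduced, and the function name is hypothetical.

```python
from collections import defaultdict

# Worked example from the text: three codes for the second research question
# (students' roles), grouped under the category label "co-designers".
CODE_TO_CATEGORY = {
    "create and refine assessment tools/tasks": "co-designers",
    "create supporting resources for staff and students": "co-designers",
    "prepare answers for designed tasks": "co-designers",
}
CATEGORY_TO_THEME = {"co-designers": "roles of students"}


def build_hierarchy(code_to_category: dict[str, str],
                    category_to_theme: dict[str, str]) -> dict[str, dict[str, list[str]]]:
    """Nest each code under its category, and each category under its theme."""
    tree = defaultdict(lambda: defaultdict(list))
    for code, category in code_to_category.items():
        tree[category_to_theme[category]][category].append(code)
    # Convert to plain dicts so the result prints and compares cleanly.
    return {theme: dict(categories) for theme, categories in tree.items()}


if __name__ == "__main__":
    print(build_hierarchy(CODE_TO_CATEGORY, CATEGORY_TO_THEME))
```

Keeping the two mappings separate lets categories be renamed or regrouped under different themes without recoding the underlying data segments.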